This doesn’t just apply in the USA. Cyclists know it well. “Freedom must be powered by gasoline.”

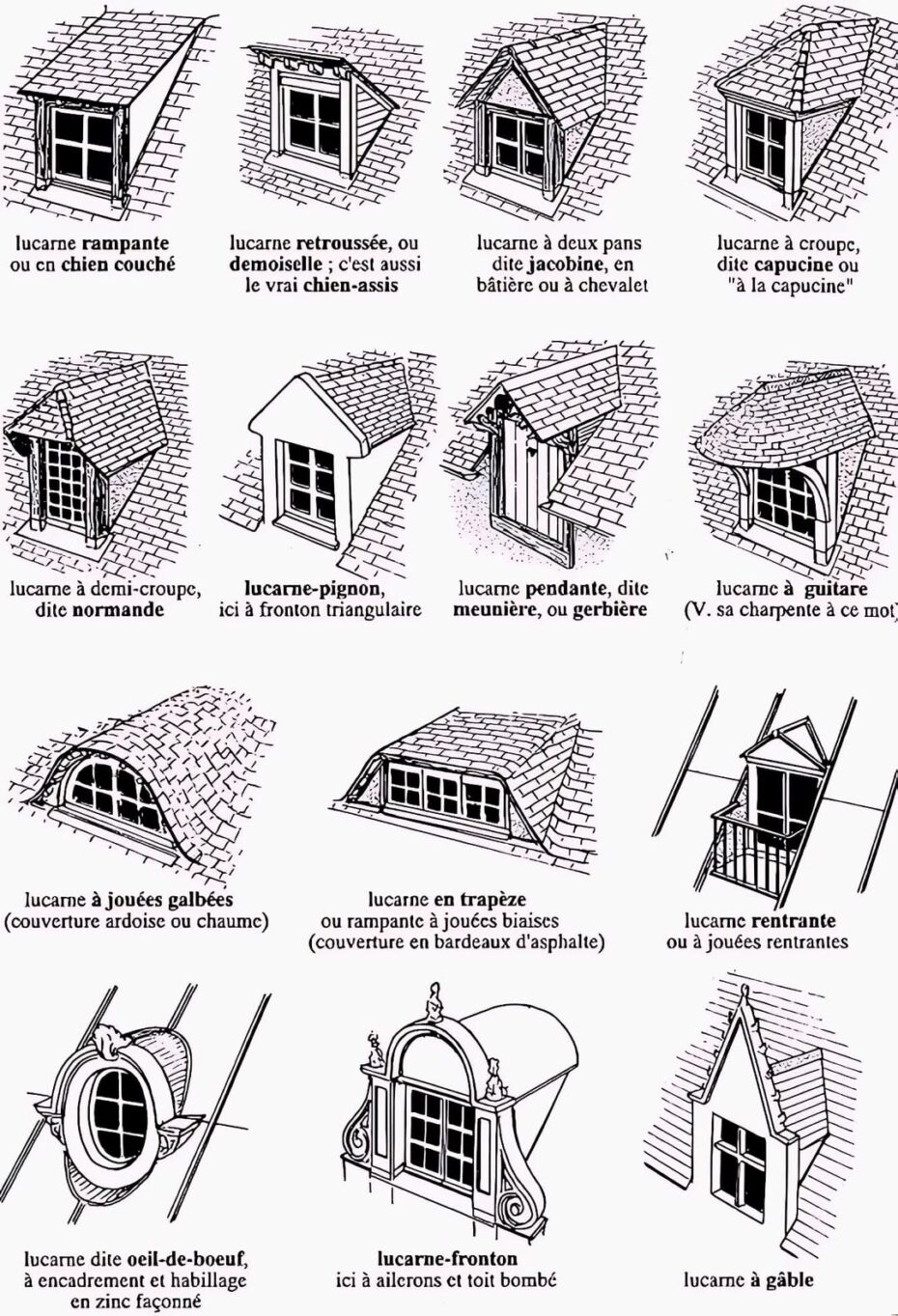

Mildly interesting: the different kinds of dormers. I’d never thought about it before, but yes, they don’t all look the same. Explained in more detail here. Learned something new again.

(via Messy Nessy)

ChatGPT suffered a worldwide outage from 06:36 UTC Tuesday morning. The servers weren’t totally down, but queries kept returning errors. OpenAI finally got it mostly fixed later in the day. [OpenAI, archive]

But you could hear the screams of the vibe coders, the marketers, and the LinkedIn posters around the world. The Drum even ran a piece about marketing teams grinding to a halt because their lying chatbot called in sick. [Drum]

There’s some market in the enterprise for AI slop generators. Wrong text summaries, LLM search engines with data leaks and privacy violations, and rambling slop emails.

But the enterprise chatbot market is jobs and tasks that are substantially … fake. Where it doesn’t matter if it’s wrong. The market for business chatbots is “we pretend to work and they pretend to pay us.”

So what do you do in your chatbot-dependent job next time OpenAI goes down?

The AI bubble is not sustainable. It’s going to pop. And it’s not clear what it would cost to serve GPT without the billions in venture capital dumb money — if it had to pay its way as a for-profit service.

Ed Zitron estimated in October 2024 that OpenAI was spending $2.35 for each $1 of revenue. So prices would have to go up about 2.35 times just to break even, and triple to leave any margin at all. I expect much more than that, because OpenAI is spending money even faster than it was a year ago. It’ll have to go to five times the current price, maybe ten times. [Ed Zitron]
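The arithmetic behind those multipliers is simple enough to sketch. This is a rough illustration only: it assumes costs and customer numbers stay fixed when prices rise, which they wouldn't, and the $20 monthly price is a hypothetical placeholder, not a figure from the estimate. Under those assumptions, the break-even multiplier is just the spend-per-revenue-dollar figure itself.

```python
# Spend per $1 of revenue, per Ed Zitron's October 2024 estimate cited above.
SPEND_PER_REVENUE_DOLLAR = 2.35

# Hypothetical monthly subscription price, for illustration only.
EXAMPLE_PRICE = 20.00


def break_even_multiplier(spend_per_revenue_dollar: float) -> float:
    """Factor by which revenue must grow to cover spending.

    Assumes costs and customer numbers do not change when prices rise,
    which is a simplification.
    """
    return spend_per_revenue_dollar


def repriced(current_price: float, multiplier: float) -> float:
    """Price after applying a multiplier, rounded to cents."""
    return round(current_price * multiplier, 2)


scenarios = {
    "break-even": break_even_multiplier(SPEND_PER_REVENUE_DOLLAR),
    "five times": 5.0,
    "ten times": 10.0,
}

for label, multiplier in scenarios.items():
    new_price = repriced(EXAMPLE_PRICE, multiplier)
    print(f"{label}: {multiplier:.2f}x -> ${new_price:.2f}/month")
```

Swap in whatever your boss is actually paying per seat for `EXAMPLE_PRICE` and see how the budget conversation goes.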

DeepSeek claims its prices, which undercut OpenAI, could turn a profit. But even they call this “theoretical.” [TechCrunch]

Tedious nerds are right now vying to comment on this post “oh you could run an open source model in-house.”

- So firstly, shut up, nerd. You’ve clearly never worked a corporate job and don’t understand the compulsion to in-house nothing.

- Secondly, approximately 0.0% of chatbot users will ever run an LLM at home.

- Thirdly, it’s slow as hell even on a top-end home Nvidia card — and the data centre cards are expensive.

- Fourthly, asking around, the few companies that do build their own in-house LLM systems pay thousands to tens of thousands per month to serve a few users with the same performance that most ChatGPT users just buy in as a service. [Mastodon]

How desperate is your boss to pretend he can replace you with a chatbot pretending to do a job? Will he pay five or ten times the price?

Anyway, I’m sure it’ll be fine and you can use your chatbot in confidence! Until you can’t.

Midjourney runs a diffusion model that you can ask to generate pictures. Disney and Universal and several other movie studios have sued because Midjourney keeps spitting out their copyrighted characters. [Complaint, PDF; case docket]

This is the first suit by big Hollywood movie studios against a generative AI company. Disney first went for one of the mid-range gen-AI companies, not a giant one. I expect they think they can get a precedent here.

The suit is not about particular copyrighted works, but copyright in the *characters*. The legal precedent on character copyright is solid.

The filing contains a whole pile of images giving an official character image next to what Midjourney gave them when they prompted it for that character. Or not even naming a character – the prompt “Superhero fight scene” gave a picture of Spider-Man fighting another Spider-Man.

Midjourney also spat out images that were really obviously taken straight from its training data, like stills from Avengers: Infinity War.

Disney and Universal first contacted Midjourney about this a year ago.

Disney knows how generative AI works. The Disney content can’t be removed from Midjourney without retraining it from scratch.

Midjourney is also odd in that it didn’t take money from outside investors and it’s actually profitable selling monthly subscriptions. This is an AI company that is not a venture capital money bonfire. It’s an actual business.

I suspect Disney isn’t out to just shut Midjourney down. Disney’s goal is to gouge Midjourney for a settlement and a license.

A pile of patient data that doctors sent to NHS England for Covid-19 research just happened to get poured into the NHS’s new LLM, Foresight. The British Medical Association and the Royal College of General Practitioners have referred NHS England to the Information Commissioner’s Office: [RCGP; Politico]

> The methodology appears to be new, contentious, and potentially with wide repercussions. It appears unlikely that a proposal would have been supported without additional, extraordinary agreements to permit it. The self-declared scope of this project appears inconsistent with the legal basis under which these data were to be used.

Foresight wants to use anonymised data from everyone in England to produce exciting new insights into health. It runs in-house at University College London on a copy of Facebook’s Llama 2. [UCL]

The British public is overwhelmingly supportive of good use of health data. But they also worry about their data being sent off to private companies like Palantir. [Understanding Patient Data; Pharmacy Business; Guardian, 2023]

And, of course, there’s not really such a thing as anonymised data. It’s notoriously easy to de-anonymise a data set. NHS England is mostly using “anonymised” as an excuse to get around data protection issues. [New Scientist, archive]
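To show why stripping names doesn't get you very far, here is a minimal sketch of one well-known approach, a linkage attack on quasi-identifiers. It is an assumption on my part that this is the relevant technique; the sources above don't say how any particular data set would be attacked. Every record, field name, and person below is made up for illustration.

```python
from collections import Counter

# Hypothetical "anonymised" records: names removed, quasi-identifiers kept.
anonymised = [
    {"postcode": "AB1", "birth_year": 1970, "sex": "F", "diagnosis": "asthma"},
    {"postcode": "AB1", "birth_year": 1970, "sex": "M", "diagnosis": "diabetes"},
    {"postcode": "CD2", "birth_year": 1985, "sex": "F", "diagnosis": "migraine"},
    {"postcode": "CD2", "birth_year": 1985, "sex": "F", "diagnosis": "eczema"},
]

# Hypothetical public list that carries names alongside the same fields.
public = [
    {"name": "Person A", "postcode": "AB1", "birth_year": 1970, "sex": "F"},
    {"name": "Person B", "postcode": "CD2", "birth_year": 1985, "sex": "F"},
]

QUASI_IDENTIFIERS = ("postcode", "birth_year", "sex")


def key(record):
    """Tuple of quasi-identifier values used to join the two data sets."""
    return tuple(record[field] for field in QUASI_IDENTIFIERS)


def reidentify(anonymised_records, public_records):
    """Match named public records to anonymised ones with a unique key."""
    counts = Counter(key(r) for r in anonymised_records)
    # Only combinations that occur exactly once pin down a single person.
    unique = {key(r): r for r in anonymised_records if counts[key(r)] == 1}
    return {
        p["name"]: unique[key(p)]["diagnosis"]
        for p in public_records
        if key(p) in unique
    }


matches = reidentify(anonymised, public)
print(matches)
```

In this toy data, Person A is re-identified because nobody else shares her postcode, birth year, and sex. Person B is protected only because a second record happens to share hers. Real records carry far more fields than three, so unique combinations are the norm, not the exception.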

NHS England says it’s “paused” data collection and launched an internal audit of the project. They insist that taking care of it themselves is fine and they don’t need some outsiders — like, say, the data protection authority for the UK — sticking their noses in. The investigation proceeds.