Hovertext:

I feel like I could at least respect if political leaders would knock down legal barriers with a folding chair, instead of just ignoring them.

Hovertext:

The weirdest part is him doing this after changing all of science forever.

Hovertext:

I need to do a book entirely about google scholar misspellings.

As Israel and Iran trade blows in a quickly escalating conflict that risks engulfing the rest of the region and triggering a more direct confrontation between Iran and the U.S., social media is being flooded with AI-generated media that claims to show the devastation, but is fake.

The fake videos and images show how generative AI has already become a staple of modern conflict. On one end, AI-generated content of unknown origin is filling the void created by state-sanctioned media blackouts with misinformation, and on the other end, the leaders of these countries are sharing AI-generated slop to spread the oldest forms of xenophobia and propaganda.

If you want to follow a war as it’s happening, it’s easier than ever. Telegram channels post live streams of bombing raids as they happen and much of the footage trickles up to X, TikTok, and other social media platforms. There’s more footage of conflict than there’s ever been, but a lot of it is fake.

A few days ago, Iranian news outlets reported that Iran’s military had shot down three F-35s. Israel denied it happened. As the claim spread so did supposed images of the downed jet. In one, a massive version of the jet smolders on the ground next to a town. The cockpit dwarfs the nearby buildings and tiny people mill around the downed jet like Lilliputians surrounding Gulliver.

It’s a fake, an obvious one, but thousands of people shared it online. Another image of the supposedly downed jet showed it crashed in a field somewhere in the middle of the night. Its wings were gone and its afterburner still glowed hot. This was also a fake.

AI slop is not the sole domain of anonymous amateur and professional propagandists. The leaders of both Iran and Israel are doing it too. The Supreme Leader of Iran is posting AI-generated missile launches on his X account, a match for similar grotesques on the account of Israel’s Minister of Defense.

New tools like Google’s Veo 3 make AI-generated videos more realistic than ever. Iranian news outlet Tehran Times shared a video to X that it said captured “the moment an Iranian missile hit a building in Bat Yam, southern Tel Aviv.” The video was fake. In another that appeared to come from a TV news spot, a massive missile moved down a long concrete hallway. It’s also clearly AI-generated, and still shows the Veo watermark in the bottom-right corner.

#BREAKING
Doomsday in Tel Aviv pic.twitter.com/5CDSUDcTY0

— Tehran Times (@TehranTimes79) June 14, 2025

After Iran launched a strike on Israel, Tehran Times shared footage of what it claimed was “Doomsday in Tel Aviv.” A drone shot rotated through scenes of destroyed buildings and piles of rubble. Like the other videos, it was an AI-generated fake that appeared on both a Telegram account and a TikTok channel named “3amelyonn.”

In Arabic, 3amelyonn’s TikTok channel calls itself “Artificial Intelligence Resistance,” but the account has no such label on Telegram. It’s been posting on Telegram since 2023, and its first TikTok video, which appeared in April 2025, is an AI-generated tour through Lebanon that shows its various cities as smoking ruins. It’s full of the quivering lines and other hallucinations typical of early AI video.

But 3amelyonn’s videos a month later are more convincing. A video posted on June 5, labeled as Ben Gurion Airport, shows bombed-out buildings and destroyed airplanes. It’s been viewed more than 2 million times. The video of a destroyed Tel Aviv, the one that made it onto Tehran Times, has been viewed more than 11 million times and was posted on May 27, weeks before the current conflict.

Hany Farid, a UC Berkeley professor and founder of GetReal, a synthetic media detection company, has been collecting these fake videos and debunking them.

“In just the last 12 hours, we at GetReal have been seeing a slew of fake videos surrounding the recent conflict between Israel and Iran. We have been able to link each of these visually compelling videos to Veo 3,” he said in a post on LinkedIn. “It is no surprise that as generative-AI tools continue to improve in photo-realism, they are being misused to spread misinformation and sow confusion.”

The spread of AI-generated media about this conflict appears to be particularly bad because both Iran and Israel are asking their citizens not to share media of destruction, which may help the other side with its targeting for future attacks. On Saturday, for example, the Israel Defense Forces asked people not to “publish and share the location or documentation of strikes. The enemy follows these documentations in order to improve its targeting abilities. Be responsible—do not share locations on the web!” Users on social media then fill this vacuum with AI-generated media.

“The casualty in this AI war [is] the truth,” Farid told 404 Media. “By muddying the waters with AI slop, any side can now claim that any other videos showing, for example, a successful strike or human rights violations are fake. Finding the truth at times of conflict has always been difficult, and now in the age of AI and social media, it is even more difficult.”

“We're committed to developing AI responsibly and we have clear policies to protect users from harm and governing the use of our AI tools,” a Google spokesperson told 404 Media. “Any content generated with Google AI has a SynthID watermark embedded and we add a visible watermark to Veo videos too.”

Farid and his team used SynthID to identify the fake videos “alongside other forensic techniques that we have developed over at GetReal,” he said. But checking a video for a SynthID watermark, which is visually imperceptible, requires someone to take the time to download the video and upload it to a separate website. Casual social media scrollers are not taking the time to verify a video they’re seeing by sending it to the SynthID website.

One distinguishing feature of the viral AI slop about the conflict, from 3amelyonn and others, is that the destruction is confined to buildings. There are no humans and no blood in 3amelyonn’s aerial shots of destruction; depictions of either are more likely to get blocked both by AI image and video generators and by the social media platforms where these creations are shared. If humans do appear, they are observers, as in the F-35 picture, or milling soldiers, as in the tunnel video. Seeing a soldier in active combat or a wounded person is rare.

There’s no shortage of real, horrifying footage from Gaza and other conflicts around the world. AI war spam, however, is almost always bloodless. A year ago, the AI-generated image “All Eyes on Rafah” garnered tens of millions of views. It was created by a Facebook group with the goal of “Making AI prosper.”

A California police department searched AI-enabled, automatic license plate reader (ALPR) cameras in relation to an “immigration protest,” according to internal police data obtained by 404 Media. The data also shows that police departments and sheriff’s offices around the country have repeatedly tapped into the cameras inside California, made by a company called Flock, on behalf of Immigration and Customs Enforcement (ICE), digitally reaching into the sanctuary state in a data sharing practice that experts say is illegal.

Flock allows participating agencies to search not only cameras in their own jurisdiction or state, but nationwide, meaning that local police who may work directly with ICE on immigration enforcement are able to search cameras inside California or other states. But this data sharing is only possible because California agencies have opted in to sharing their data with agencies in other states, making them legally responsible for the data sharing.

The news raises questions about whether California agencies are enforcing the law on their own data sharing practices, threatens to undermine the perception of California as a sanctuary state, and highlights the sort of surveillance or investigative tools law enforcement may deploy at immigration-related protests. Over the weekend, millions of people attended No Kings protests across the U.S. 404 Media’s findings come after we revealed police were searching cameras in Illinois on behalf of ICE, and then CalMatters found local law enforcement agencies in California were searching cameras for ICE too.

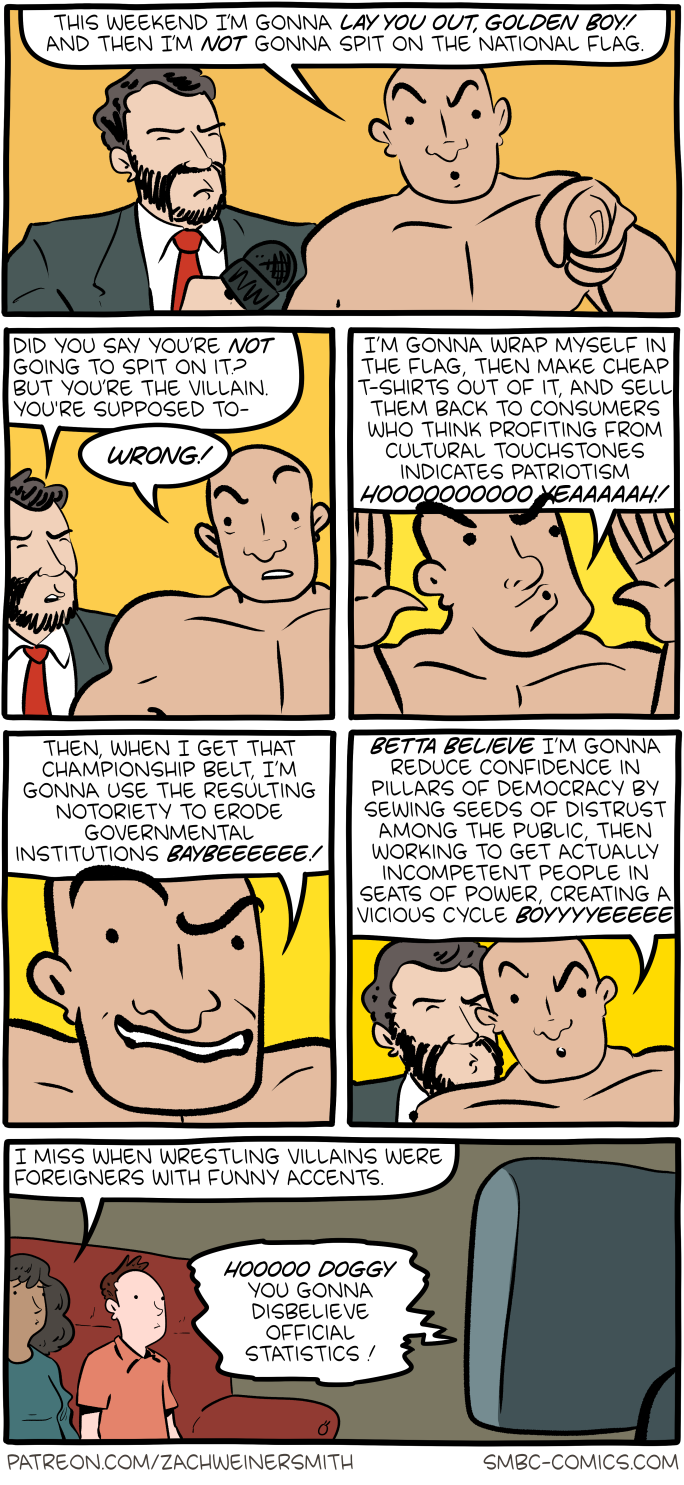

On Monday, federal and state authorities charged Vance Boelter with the murders of Minnesota Rep. Melissa Hortman and her husband. An affidavit written by an FBI Special Agent, published here by MSNBC, includes photos of a notepad found in Boelter’s SUV which included a long list of people search sites, some of which make it very easy for essentially anyone to find the address and other personal information of someone else in the U.S. The SUV contained other notebooks and some pages included the names of more than 45 Minnesota state and federal public officials, including Hortman, the affidavit says. Hortman’s home address was listed next to her name, it adds.

People search sites can present a risk to citizens’ privacy and, depending on the context, physical safety. They aggregate data from property records, social media, marriage licenses, and other places and make it accessible to even those with no tech savvy. Some are free, some are paid, and some require a user to tick a box confirming they’re only using the data for certain permitted use cases.

Congress has known about the risks of easily accessible personal data for decades. In 1994, lawmakers created the Driver’s Privacy Protection Act (DPPA) after a stalker hired a private investigator who then obtained the address of actress Rebecca Schaeffer from a DMV. The stalker then murdered Schaeffer. With people search sites, though, lawmakers have been largely motionless, despite the sites existing for years on the open web, accessible by a Google search and sometimes even promoted with Google advertisements.

Senator Ron Wyden said in a statement, “The accused Minneapolis assassin allegedly used data brokers as a key part of his plot to track down and murder Democratic lawmakers. Congress doesn't need any more proof that people are being killed based on data for sale to anyone with a credit card. Every single American's safety is at risk until Congress cracks down on this sleazy industry.”

This notepad does not necessarily mean that Boelter used these specific sites to find Hortman’s or other officials’ addresses. As the New York Times noted, Hortman’s address was on her campaign website, and Minnesota State Senator John Hoffman, whom Boelter allegedly shot along with Hoffman’s wife, listed his address on his official legislative webpage.

The sites’ inclusion shows they are of high interest to a person who allegedly murdered and targeted multiple officials and their families in an act of political violence. Next to some of the people search site names, Boelter appears to have put a star or tick.

Those people search sites are:

A spokesperson for Atlas, a company that is suing a variety of people search sites, said, “Tragedies like this might be prevented if data brokers simply complied with state and federal privacy laws. Our company has been in court for more than 15 months litigating against each of the eleven data brokers identified in the alleged shooter’s writings, seeking to hold them accountable for refusing to comply with New Jersey’s Daniel’s Law which seeks to protect the home addresses of judges, prosecutors, law enforcement and their families. This industry’s purposeful refusal to comply with privacy laws has and continues to endanger thousands of public servants and their families.”

404 Media has repeatedly reported on how data can be weaponized against people. We found violent criminals and hackers were able to dox nearly anyone in the U.S. for $15, using bots that were based on data people had given as part of opening credit cards. In 2023 Verizon gave sensitive information, including an address on file, of one of its customers to her stalker, who then drove to the address armed with a knife.

404 Media was able to contact most of the people search sites for comment. None responded.

Update: this piece has been updated to include a statement from Atlas. An earlier version of this piece was accidentally published with a different structure; this corrected version includes more information about the DPPA.