Hovertext:

I wonder if you could trick people into paying more in taxes by calling it traditional crowdfunding?

Copilot is a collection of security holes. In the latest, Copilot was summarising any email in your sent items or drafts — including emails with confidentiality labels. This was reported in January. Microsoft says it’s fixed as of … three days ago. [Bleeping Computer; NHS]

Last year, you could tell Copilot not to log accesses to sensitive files. If you told Copilot to summarise the file but not to give you a link … it didn’t put the access in the audit log! [blog post]

Zack Korman from Pistachio reported this to Microsoft in July 2025. But Michael Bargury from Zenity had talked about the hole at Blackhat in August 2024. Microsoft just didn’t fix it for a year! [YouTube, 11:01 on]

But Copilot’s worth it for workplace efficiency, right? The UK Department for Business and Trade measured Copilot. Civil servants saved about 26 minutes a day — with no evidence of increased productivity.

The Department for Work and Pensions ran their own Copilot trial. They only saved 19 minutes a day. Copilot is still in place across UK government. [Gov.UK]

But this is enterprise software-as-a-service! Making the sale means winning hearts and minds!

Microsoft is paying influencers to say Copilot isn’t awful garbage that makes work miserable by, e.g., “posting an Instagram video about fun things to do with Microsoft Copilot.” [CNBC]

Microsoft and Google are spending $400,000–$600,000 per influencer.

So if you see someone promoting the worst slop generator you’ve ever had to use, wish them well for the cheque clearing, and hit unsubscribe.

It’s pledge week at Pivot to AI! If you enjoyed this post, and our other posts, please do put $5 into the Patreon. It helps us keep Pivot coming out daily. Thank you all.

For months, the hottest will-they/won't-they drama in Hollywood concerned the suitors for Warners, up for sale again after being bought, merged, looted and wrecked by the eminently guillotineable David Zaslav:

https://www.youtube.com/watch?v=izC9o3LhnVk

From the start, it was clear that Warners would be sucked dry and discarded, but the Trump 2024 election turned the looting of Warners' corpse into a high-stakes political drama.

On the one hand, you had Netflix, who wanted to buy Warners and use them to make good movies, but also to kill off movie theaters forever by blocking theatrical distribution of Warners' products.

On the other hand, you had Paramount, owned by the spray-tan cured tech billionaire jerky Larry Ellison, though everyone is supposed to pretend that Ellison's do-nothing/know-nothing/amounts-to-nothing son Billy (or whatever who cares) Ellison is running the show.

Ellison's plan was to buy Warners and fold it into the oligarchic media capture project that's seen Ellison replace the head of CBS with the tedious mediocrity Bari Weiss:

https://www.wnycstudios.org/podcasts/otm/articles/the-centurylong-capture-of-us-media

This is a multi-pronged media takeover that includes Jeff Bezos neutering the Washington Post, Elon Musk turning Twitter into a Nazi bar, and Trump stealing Tiktok and giving it to Larry Ellison. If Ellison gains control over Warners, you can add CNN to the nonsense factory.

But for a while there, it looked like the Ellisons would lose the bidding. Little Timmy (or whatever who cares) Ellison only has whatever money his dad parks in his bank account for tax purposes, and Larry Ellison is so mired in debt that one margin call could cost him his company, his fighter jet, and his Hawaiian version of Little St James Island.

Warners' board may not give a shit about making good media or telling the truth or staving off fascism, but they do want to get paid, and Netflix has money in the bank, whereas Ellison only has the bank's money (for now).

But last week, the dam broke: Warners' board indicated they'd take Paramount's offer, and Netflix withdrew their offer, and so that's that, right? It's not like Trump's FTC is going to actually block this radioactively illegal merger, despite the catastrophic corporate consolidation that would result, with terrible consequences for workers, audiences, theaters, cable operators and the entire supply chain.

Not so fast! The Clayton Act – which bars this kind of merger – is designed to be enforced by the feds, state governments, and private parties. That means that California AG Rob Bonta can step in to block this merger, which he's getting ready to do:

https://prospect.org/2026/02/27/states-can-block-paramount-warner-deal/

As David Dayen writes in The American Prospect, state AGs block mergers all the time, even when the feds decline to step in – just a couple years ago, Washington state killed the Kroger/Albertsons merger.

The fact that antitrust laws can be enforced at the state level is a genius piece of policy design. As the old joke goes, "AG" stands for "aspiring governor," and the fact that state AGs can step in to rescue their voters from do-nothing political hacks in Washington is catnip for our nation's attorneys general.

Bonta is definitely feeling his oats: he's also going after Amazon for price-fixing, picking up a cause that Trump dropped after Jeff Bezos ordered the Washington Post to cancel its endorsement of Kamala Harris, paid a million bucks to sit on the inaugural dais, millions more to fund the White House Epstein Memorial Ballroom and $40m more to make an unwatchable turkey of a movie about Melania Trump.

Can you imagine how stupid Bezos is going to feel when all of his bribes to Trump cash out to nothing after Rob Bonta publishes Amazon's damning internal memos and then fines the company a gazillion dollars?

It's a testament to the power of designing laws so they can be enforced by multiple parties. And as cool as it is to have a law that state AGs can enforce, it's way cooler to have a law that can be enforced by members of the public.

This is called a "private right of action" – the thing that lets impact litigation shops like Planned Parenthood, EFF, and the ACLU sue over violations of the public's rights. The business lobby hates the private right of action, because they think (correctly) that they can buy off enough regulators and enforcers to let them get away with murder (often literally), but they know they can't buy off every impact litigation shop and every member of the no-win/no-fee bar.

For decades, corporate America has tried to abolish the public's right to sue companies under any circumstances. That's why so many terms of service now feature "binding arbitration waivers" that deny you access to the courts, no matter how badly you are injured:

https://pluralistic.net/2025/10/27/shit-shack/#binding-arbitration

But long before Antonin Scalia made it legal to cram binding arbitration down your throat, corporate America was pumping out propaganda for "tort reform," spreading the story that greedy lawyers were ginning up baseless legal threats to extort settlements from hardworking entrepreneurs. These stories are 99.9% bullshit, including urban legends like the "McDonald's hot coffee" lawsuit:

https://pluralistic.net/2022/06/12/hot-coffee/#mcgeico

Ever since Reagan, corporate America has been on a 45-year winning streak. Nothing epitomizes the arrogance of these monsters more than the GW Bush administration's sneering references to "the reality-based community":

We're an empire now, and when we act, we create our own reality. And while you're studying that reality – judiciously, as you will – we'll act again, creating other new realities, which you can study too, and that's how things will sort out. We're history's actors…and you, all of you, will be left to just study what we do.

https://en.wikipedia.org/wiki/Reality-based_community

Giving Ellison, Bezos and Musk control over our media seems like the triumph of billionaires' efforts to "create their own reality," and indeed, for years, they've been able to gin up national panics over nothingburgers like "trans ideology," "woke" and "the immigration crisis."

But just lately, that reality-creation machine has started to break down. Despite taking over the press, locking every reality-based reporter out of the White House, and getting Musk, Zuck and Ellison to paint their algorithms spray-tan orange, people just fucking hate Trump. He is underwater on every single issue:

https://www.gelliottmorris.com/p/ahead-of-state-of-the-union-address

Despite the full-court press – from both the Dem and the GOP establishment – to deny the genocide in Gaza and paint anyone (especially Jews like me) who condemns the slaughter as an "antisemite," Americans condemn Israel and are fully in the tank for Palestinians:

https://news.gallup.com/poll/702440/israelis-no-longer-ahead-americans-middle-east-sympathies.aspx

Despite throwing massive subsidies at coal and tying every available millstone around renewables' ankles before throwing all the solar panels and windmills into the sea, renewables are growing and – to Trump's great chagrin – oil companies can't find anyone to loan them the money they need to steal Venezuela's oil:

https://kschroeder.substack.com/p/earning-optimism-in-2026

Reality turns out to be surprisingly stubborn, and what's more, it has a pronounced left-wing bias. Putting little Huey (or whatever who cares) Ellison in charge of Warners will be bad news for the news, for media, for movies and TV, and for my neighbors in Burbank. But when it comes to shaping the media, Freddy (or whatever who cares) Ellison will continue to eat shit.

Democrats Should Launch a “Nuremberg Caucus” to Investigate the Crimes of the Trump Regime https://www.thenation.com/article/politics/democrats-nuremberg-caucus-trump-administration-crimes/

Two-thirds of Americans want term limits for Supreme Court justices https://www.gelliottmorris.com/p/two-thirds-of-americans-want-term

On the Democratic Party Style https://coreyrobin.com/2026/02/26/on-the-democratic-party-style/

Hannah Spencer gives DEFIANT victory speech as she wins Gorton & Denton for the Greens https://www.youtube.com/watch?v=KrzLQ294guI&t=473s

#25yrsago Mormon guide to overcoming masturbation https://web.archive.org/web/20071011023731/http://www.qrd.org/qrd/religion/judeochristian/protestantism/mormon/mormon-masturbation

#20yrsago Midnighters: YA horror trilogy mixes Lovecraft with adventure https://memex.craphound.com/2006/02/26/midnighters-ya-horror-trilogy-mixes-lovecraft-with-adventure/

#20yrsago RIP, Octavia Butler https://darkush.blogspot.com/2006/02/octavia-butler-died-saturday.html

#20yrsago Disney hiring “Intelligence Analyst” to review “open source media” https://web.archive.org/web/20060303165009/http://www.defensetech.org/archives/002199.html

#20yrsago MPAA exec can’t sell A-hole proposal to tech companies https://web.archive.org/web/20060325013506/http://lawgeek.typepad.com/lawgeek/2006/02/variety_mpaa_ca.html

#15yrsago Why are America’s largest corporations paying no tax? https://web.archive.org/web/20110226160552/https://thinkprogress.org/2011/02/26/main-street-tax-cheats/

#15yrsago Articulated cardboard Cthulhu https://web.archive.org/web/20110522204427/http://www.strode-college.ac.uk/teaching_teams/cardboard_catwalk/285

#15yrsago Freeman Dyson reviews Gleick’s book on information theory https://www.nybooks.com/articles/2011/03/10/how-we-know/?pagination=false

#15yrsago 3D printing with mashed potatoes https://www.fabbaloo.com/2011/02/3d-printing-potatoes-with-the-rapman-html

#15yrsago TVOntario’s online archive, including Prisoners of Gravity! https://web.archive.org/web/20110226021403/https://archive.tvo.org/

#10yrsago _applyChinaLocationShift: In China, national security means that all the maps are wrong https://web.archive.org/web/20160227145529/http://www.travelandleisure.com/articles/digital-maps-skewed-china

#10yrsago Teaching kids about copyright: schools and fair use https://www.youtube.com/watch?v=hzqNKQbWTWc

#10yrsago Ghostwriter: Trump didn’t write “Art of the Deal,” he read it https://web.archive.org/web/20160229034618/http://www.deathandtaxesmag.com/264591/donald-trump-didnt-write-art-deal-tony-schwartz/

#10yrsago The biggest abortion lie of all: “They do it for the money” https://www.bloomberg.com/features/2016-abortion-business/

#10yrsago NHS junior doctors show kids what they do, kids demand better of Jeremy Hunt https://juniorjuniordoctors.tumblr.com/

#10yrsago Nissan yanks remote-access Leaf app — 4+ weeks after researchers report critical flaw https://www.theverge.com/2016/2/25/11116724/nissan-nissanconnect-app-hack-offline

#10yrsago Think you’re entitled to compensation after being wrongfully imprisoned in California? Nope. https://web.archive.org/web/20160229013042/http://modernluxury.com/san-francisco/story/the-crazy-injustice-of-denying-exonerated-prisoners-compensation

#10yrsago BC town votes to install imaginary GPS trackers in criminals https://web.archive.org/web/20160227114334/https://motherboard.vice.com/read/canadian-city-plans-to-track-offenders-with-technology-that-doesnt-even-exist-gps-implant-williams-lake

#10yrsago New Zealand’s Prime Minister: I’ll stay in TPP’s economic suicide-pact even if the USA pulls out https://www.techdirt.com/2016/02/26/new-zealand-says-laws-to-implement-tpp-will-be-passed-now-despite-us-uncertainties-wont-be-rolled-back-even-if-tpp-fails/

#10yrsago South Korean lawmakers stage filibuster to protest “anti-terror” bill, read from Little Brother https://memex.craphound.com/2016/02/26/south-korean-lawmakers-stage-filibuster-to-protest-anti-terror-bill-read-from-little-brother/

#5yrsago Privacy is not property https://pluralistic.net/2021/02/26/meaningful-zombies/#luxury-goods

#1yrago With Great Power Came No Responsibility https://pluralistic.net/2025/02/26/ursula-franklin/#franklinite

Victoria: Enshittification at Russell Books, Mar 4

https://www.eventbrite.ca/e/cory-doctorow-is-coming-to-victoria-tickets-1982091125914

Barcelona: Enshittification with Simona Levi/Xnet (Llibreria Finestres), Mar 20

https://www.llibreriafinestres.com/evento/cory-doctorow/

Berkeley: Bioneers keynote, Mar 27

https://conference.bioneers.org/

Montreal: Bronfman Lecture (McGill) Apr 10

https://www.eventbrite.ca/e/artificial-intelligence-the-ultimate-disrupter-tickets-1982706623885

Berlin: Re:publica, May 18-20

https://re-publica.com/de/news/rp26-sprecher-cory-doctorow

Berlin: Enshittification at Otherland Books, May 19

https://www.otherland-berlin.de/de/event-details/cory-doctorow.html

Hay-on-Wye: HowTheLightGetsIn, May 22-25

https://howthelightgetsin.org/festivals/hay/big-ideas-2

Making The Internet Suck Less (Thinking With Mitch Joel)

https://www.sixpixels.com/podcast/archives/making-the-internet-suck-less-with-cory-doctorow-twmj-1024/

Panopticon :3 (Trashfuture)

https://www.patreon.com/posts/panopticon-3-150395435

America's Enshittification is Canada's Opportunity (Do Not Pass Go)

https://www.donotpassgo.ca/p/americas-enshittification-is-canadas

Everything Wrong With the Internet and How to Fix It, with Tim Wu (Ezra Klein)

https://www.nytimes.com/2026/02/06/opinion/ezra-klein-podcast-doctorow-wu.html

"Enshittification: Why Everything Suddenly Got Worse and What to Do About It," Farrar, Straus, Giroux, October 7 2025

https://us.macmillan.com/books/9780374619329/enshittification/

"Picks and Shovels": a sequel to "Red Team Blues," about the heroic era of the PC, Tor Books (US), Head of Zeus (UK), February 2025 (https://us.macmillan.com/books/9781250865908/picksandshovels).

"The Bezzle": a sequel to "Red Team Blues," about prison-tech and other grifts, Tor Books (US), Head of Zeus (UK), February 2024 (thebezzle.org).

"The Lost Cause:" a solarpunk novel of hope in the climate emergency, Tor Books (US), Head of Zeus (UK), November 2023 (http://lost-cause.org).

"The Internet Con": A nonfiction book about interoperability and Big Tech (Verso) September 2023 (http://seizethemeansofcomputation.org). Signed copies at Book Soup (https://www.booksoup.com/book/9781804291245).

"Red Team Blues": "A grabby, compulsive thriller that will leave you knowing more about how the world works than you did before." Tor Books http://redteamblues.com.

"Chokepoint Capitalism: How to Beat Big Tech, Tame Big Content, and Get Artists Paid, with Rebecca Giblin", on how to unrig the markets for creative labor, Beacon Press/Scribe 2022 https://chokepointcapitalism.com

"Enshittification, Why Everything Suddenly Got Worse and What to Do About It" (the graphic novel), FirstSecond, 2026

"The Post-American Internet," a geopolitical sequel of sorts to Enshittification, Farrar, Straus and Giroux, 2027

"Unauthorized Bread": a middle-grades graphic novel adapted from my novella about refugees, toasters and DRM, FirstSecond, 2027

"The Memex Method," Farrar, Straus, Giroux, 2027

Currently writing: "The Post-American Internet," a sequel to "Enshittification," about the better world the rest of us get to have now that Trump has torched America (1022 words today, 40256 total)

"The Post-American Internet," a short book about internet policy in the age of Trumpism. PLANNING.

A Little Brother short story about DIY insulin PLANNING

This work – excluding any serialized fiction – is licensed under a Creative Commons Attribution 4.0 license. That means you can use it any way you like, including commercially, provided that you attribute it to me, Cory Doctorow, and include a link to pluralistic.net.

https://creativecommons.org/licenses/by/4.0/

Quotations and images are not included in this license; they are included either under a limitation or exception to copyright, or on the basis of a separate license. Please exercise caution.

Blog (no ads, tracking, or data-collection):

Newsletter (no ads, tracking, or data-collection):

https://pluralistic.net/plura-list

Mastodon (no ads, tracking, or data-collection):

Medium (no ads, paywalled):

Twitter (mass-scale, unrestricted, third-party surveillance and advertising):

Tumblr (mass-scale, unrestricted, third-party surveillance and advertising):

https://mostlysignssomeportents.tumblr.com/tagged/pluralistic

"When life gives you SARS, you make sarsaparilla" -Joey "Accordion Guy" DeVilla

READ CAREFULLY: By reading this, you agree, on behalf of your employer, to release me from all obligations and waivers arising from any and all NON-NEGOTIATED agreements, licenses, terms-of-service, shrinkwrap, clickwrap, browsewrap, confidentiality, non-disclosure, non-compete and acceptable use policies ("BOGUS AGREEMENTS") that I have entered into with your employer, its partners, licensors, agents and assigns, in perpetuity, without prejudice to my ongoing rights and privileges. You further represent that you have the authority to release me from any BOGUS AGREEMENTS on behalf of your employer.

ISSN: 3066-764X

Hovertext:

You should've seen the look on your face when you thought you'd gotten your mom killed!

Hovertext:

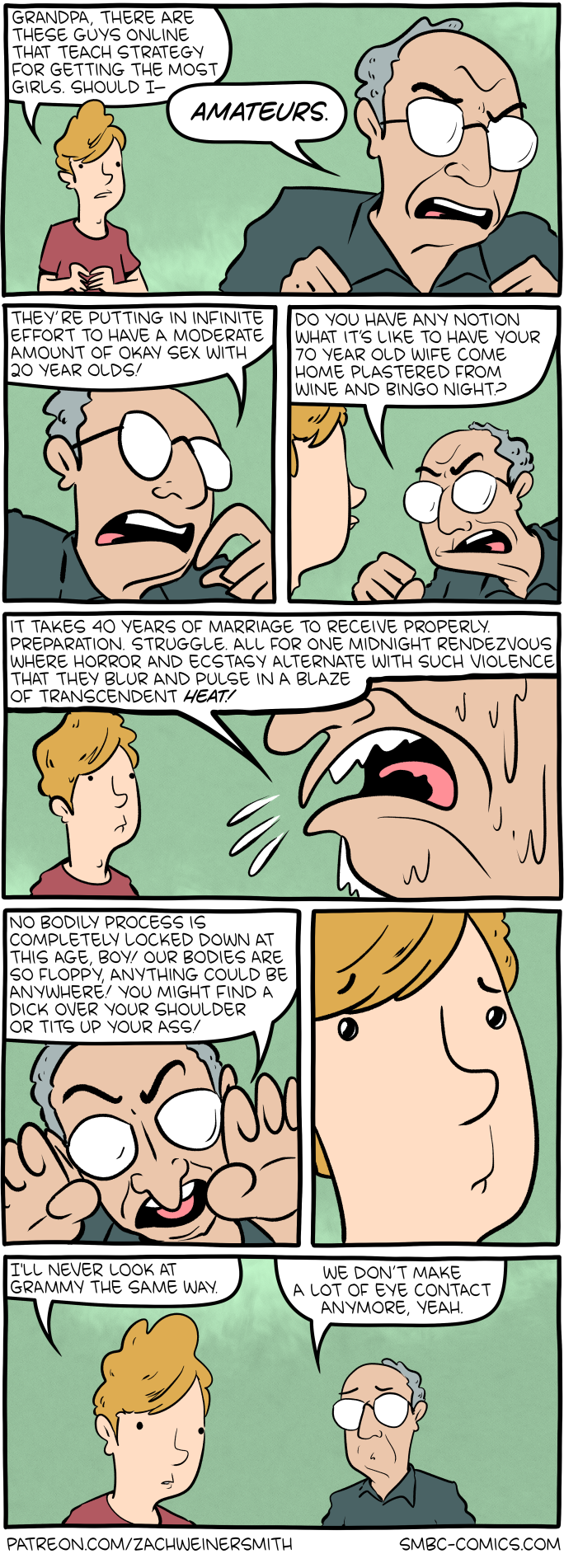

SMBC is just propaganda for longterm relationships.

It might look like something from the early days of the internet, with its aggressively grey color scheme and rectangles nested inside rectangles, but FPDS.gov is one of the most important resources for keeping tabs on what powerful spying tools U.S. government agencies are buying. It includes everything from phone hacking technology, to masses of location data, to more Palantir installations.

Or rather, it was an incredible tool, and the basis for countless investigations, both my own and others'. On Wednesday, the government shut it down. Its replacement, another site called SAM.gov with Uncle Sam branding, frankly sucks, and makes it demonstrably harder to reliably find out what agencies, including Immigration and Customs Enforcement (ICE), are spending taxpayer dollars on.