NVIDIA has become a giant, unhealthy rock on which the US markets (and to some extent the US economy) sit, representing 7-to-8% of the value of the market and a large percentage of the $400 billion in AI data center capex expected to be spent this year, which in turn accounted for more GDP growth than all consumer spending combined.

I originally started writing this piece about something else entirely (the ridiculous Oracle deal, what the consequences of my being right might be, and a lot of ideas that I'll get to later), but I couldn't stop looking at what NVIDIA is doing.

To be clear, NVIDIA is insane, making 88% of its massive revenues from selling the GPUs and associated server hardware that underpin the inference and training of Large Language Models, a market it effectively created by acquiring Mellanox for $6.9 billion in 2019, along with the underlying hardware that allowed for the high-speed networking needed to connect massive banks of servers and GPUs together, a deal now under investigation by China's antitrust authorities.

Since 2023, NVIDIA has made an astonishing amount of money from its data center vertical, going from making $47 billion in the entirety of its Fiscal Year 2023 to making $41.1 billion in its last quarterly earnings alone.

What's even more remarkable is how little money anyone is making as a result, with the combined revenues of the entire generative AI industry unlikely to cross $40 billion this year, even when you include companies like AI compute company CoreWeave, which expects to make a little over $5 billion this year, though most of that revenue comes from Microsoft, OpenAI (funded by Microsoft), Google (which is paying CoreWeave to provide compute to OpenAI, despite OpenAI already being a client of CoreWeave, both under Microsoft and in its own name)...and now NVIDIA itself, which has agreed to buy $6.3 billion of any unsold cloud compute through, I believe, the next four years.

Hearing about this deal made me curious.

Why is NVIDIA acting as a backstop to CoreWeave? And why is it paying $1.5 billion over four years to rent back thousands of its own GPUs from Lambda, another AI compute company it invested in?

The answer is simple: NVIDIA is effectively incubating its own customers, creating the contracts necessary for them to raise debt to buy GPUs — from NVIDIA, of course — which can, in turn, be used as collateral for further loans to buy even more GPUs. These compute contracts are used by AI compute companies as a form of collateral — proof of revenue to reassure creditors that they're good for the money so that they can continue to raise mountains of debt to build more data centers to fill with more GPUs from NVIDIA.

This has also created demand for companies like Dell and Supermicro, which accounted for a combined 39% of NVIDIA's most recent quarterly revenues. Dell and Supermicro buy GPUs sold by NVIDIA and build the server architecture around them necessary to provide AI compute, reselling the finished systems to companies like CoreWeave and Lambda, who also buy GPUs of their own and have preferential access from NVIDIA.

You'll be shocked to hear that NVIDIA also invested in both CoreWeave and Lambda, that Supermicro also invested in Lambda, and that Lambda also gets its server hardware from Supermicro.

While this is the kind of merciless, unstoppable capitalism that has made Jensen Huang such a success, there's an underlying problem — that these companies become burdened with massive debt, used to send money to NVIDIA, Supermicro (an AI server/architecture reseller), and Dell (another reseller that works directly with CoreWeave), and there doesn't actually appear to be mass market demand for AI compute, other than the voracious hunger to build more of it.

In a thorough review of just about everything ever written about them, I found a worrying pattern within the three major neoclouds (CoreWeave, Lambda, and Nebius): a lack of any real revenue outside of Microsoft, OpenAI, Meta, Amazon, and of course NVIDIA itself, and a growing pile of debt raised in expectation of demand that I don't believe will ever arrive.

To make matters worse, I've also found compelling evidence that all three of these companies lack the capacity to actually serve massive contracts like OpenAI's $11.9 billion deal with CoreWeave (and an additional $4 billion added a few months later), or Nebius' $17.4 billion deal with Microsoft, both of which were used to raise debt for each company.

On some level, NVIDIA's neocloud play was genius, creating massive demand for its own GPUs, both directly and through resellers, and creating competition for big tech clouds like Microsoft Azure and Amazon Web Services, suppressing prices in cloud compute and forcing those firms to buy more GPUs to compete with CoreWeave's imaginary scale.

The problem is that there is no real demand outside of big tech's own alleged need for compute. Across the board, CoreWeave, Nebius and Lambda have similar clients, with the majority of CoreWeave's revenue coming from companies offering compute to OpenAI or NVIDIA's own "research" compute.

Neoclouds exist as an outgrowth of NVIDIA, taking on debt using GPUs as collateral, which they use to buy more GPUs, which they then use as collateral along with the compute contracts they sign with OpenAI, Microsoft, Amazon or Google.

Beneath the surface of the AI "revolution" lies a dirty secret: most of the money comes from one of four companies feeding cash to a company incubated by NVIDIA specifically to buy GPUs and their associated hardware.

I will add that NVIDIA also invested in Crusoe, the company building OpenAI's data center operation out in Abilene, Texas. The reason I haven't included it in this larger piece is that it's currently focused on literally one client: Oracle, which is in turn serving one other client, OpenAI.

These Neoclouds are entirely dependent on a continual flow of private credit from firms like Goldman Sachs (Nebius, CoreWeave, Lambda for its IPO), JPMorgan (Lambda, Crusoe, CoreWeave), and Blackstone (Lambda, CoreWeave), who have in a very real sense created an entire debt-based infrastructure to feed billions of dollars directly to NVIDIA, all in the name of an AI revolution that's yet to arrive.

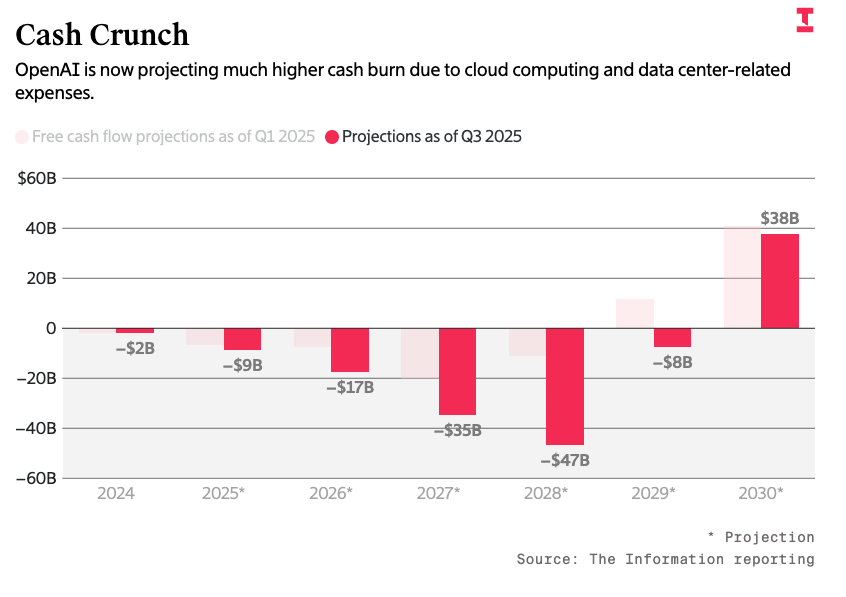

Those billions (an estimated $50 billion a quarter for the last three quarters at least) will eventually be expected to produce some sort of return, yet every neocloud is a gigantic money loser, with CoreWeave burning $300 million in the last quarter and expecting to spend more than $20 billion in capital expenditures in 2025 alone.

At some point the lack of real money in these companies will make them unable to pay their ruinous debt, and with NVIDIA's growth already slowing, I think we're watching a private credit bubble grow with no way for any of the money to escape.

I'm not sure where it'll end, but it's not going to be pretty.

Let's begin.